This is the third article in the series: ThingWorx Analytics introduction.

The first article introduces Analytics Functions and Modules.

The second article introduces Non Time Series TTF prediction.

Environment: ThingWorx Platform 8.5, Analytics Server 8.5, Analytics Extension 8.5.

In TTF prediction, a Time Series Data model is better than a Non Time Series Data model.

What is a Time Series Data model?

In machine learning terms, training a model means finding the best-fitting parameters, which are then combined with the features to calculate the result values.

For Non Time Series Data models, the current values are treated as unrelated to the previous values.

For Time Series Data models, each calculation checks not only the current values but also the previous values, and those previous values are ordered in time.
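To illustrate the difference, here is a minimal sketch in plain JavaScript of how a time-ordered series can be turned into rows that each carry the current sample plus its predecessors. The function name `buildLookBackRows` and the parameter `windowSize` are hypothetical, and the sketch assumes the window includes the current sample. It only illustrates the idea and is not how Analytics Server builds its training data internally.

```javascript
// Build one row per time step; each row holds the previous samples
// plus the current one (windowSize values in total, oldest first).
function buildLookBackRows(samples, windowSize) {
    var rows = [];
    // The first complete window ends at index windowSize - 1.
    for (var i = windowSize - 1; i < samples.length; i++) {
        rows.push(samples.slice(i - windowSize + 1, i + 1));
    }
    return rows;
}

var rows = buildLookBackRows([10, 11, 12, 13, 14], 4);
// rows → [[10, 11, 12, 13], [11, 12, 13, 14]]
```

A Non Time Series model would see only the last value of each row. A Time Series model sees the whole row.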

There are 2 important terms here: look back size and data sampling frequency.

Look back size = the number of data samples to look back.

Data sampling frequency = how often a new data sample is taken.

Look back size * Data sampling frequency (expressed as the interval between two samples) = the total time span of data fed into the calculation, which I call the look back area. The data is queried from the Value Stream with maxItems = look back size and startDate = current date – look back area.

For example, if look back size = 4 and data sampling frequency = 1 minute, then look back area = 4 minutes.

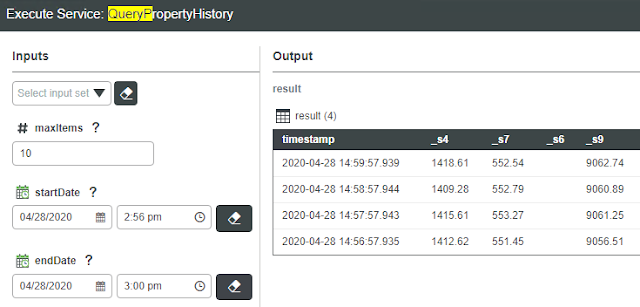
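The arithmetic above can be sketched in plain JavaScript. The helper name `lookBackWindow` and its parameters are hypothetical. The sampling frequency is passed as an interval in milliseconds, and the fixed `now` value is used only to make the example reproducible.

```javascript
// Compute the query window for a given look back size and sampling interval.
function lookBackWindow(lookBackSize, samplingIntervalMs, now) {
    // Look back area = look back size * sampling interval.
    var lookBackAreaMs = lookBackSize * samplingIntervalMs;
    return {
        maxItems: lookBackSize,
        startDate: new Date(now.getTime() - lookBackAreaMs),
        endDate: now
    };
}

// Look back size = 4, one sample per minute.
var now = new Date(Date.UTC(2020, 0, 1, 12, 0, 0));
var queryWindow = lookBackWindow(4, 60 * 1000, now);
// queryWindow.startDate.toISOString() → "2020-01-01T11:56:00.000Z"
```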

ThingWorx uses Value Streams to log Time Series Data. We can run the QueryPropertyHistory service to get historic data:

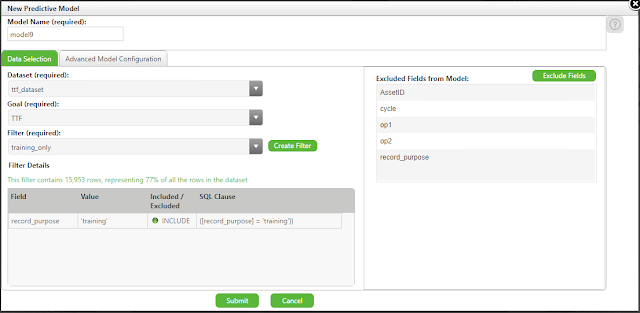

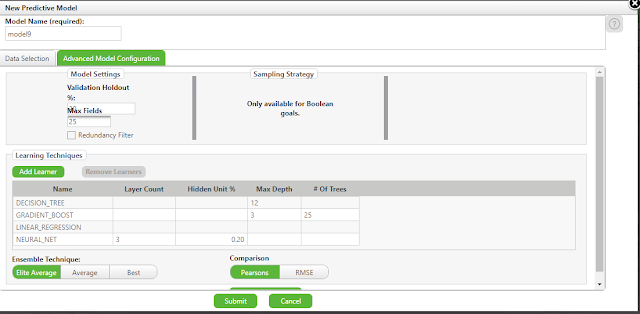
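As a rough sketch, the same query can be issued from a service script. The wrapper name `queryLookBack` is hypothetical. It takes the Thing as a parameter so that the snippet also runs outside ThingWorx, and inside a Thing service you would pass `me`. Setting `oldestFirst` to true is an assumption made here for readability, not a requirement stated in this article.

```javascript
// Query the logged history of a Thing for one look back window.
// `thing` is any Thing whose properties are Logged to a Value Stream.
function queryLookBack(thing, lookBackSize, startDate, endDate) {
    return thing.QueryPropertyHistory({
        maxItems: lookBackSize,  // number of samples to return
        startDate: startDate,    // current date minus look back area
        endDate: endDate,        // usually the current date
        oldestFirst: true        // assumption: chronological order
    });
}

// Inside a Thing service, assuming a 4-minute look back area:
// var end = new Date();
// var start = new Date(end.getTime() - 4 * 60 * 1000);
// var result = queryLookBack(me, 4, start, end);
```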

Some other notes for TTF prediction models:

• Always set useRedundancyFilter = true
• Always set useGoalHistory = false
• Always set lookahead = 1
• Training time is much longer than for Non Time Series data

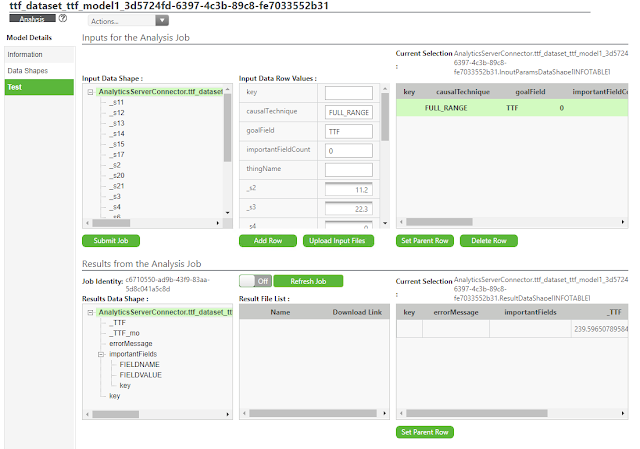

After the model is published, testing the model requires entering not only the current values of all features but also the previous values, as defined by the look back size; then click Add Row to add the new record.

When creating Analysis Events, note that the Thing Properties used in Inputs Mapping should be Logged, because historic values can only be queried from the Value Stream for Logged Properties.

For Results Mapping, if we bind the result to a Thing Property, the update made by Analytics is somehow not logged into the Value Stream, even if that Property is set to Logged. As a workaround, we can create another Property, bind it in Results Mapping, sync it to the final Property we're monitoring with a Service or Subscription, and log that final Property into the Value Stream. After that, we can track all of the historic changes with a TimeSeriesChart.
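The sync step could look like the sketch below. The property names `TTF_Predicted` (bound in Results Mapping) and `TTF_Final` (Logged, shown in the chart) are hypothetical, as is the function name. The body is wrapped in a function only so that it can run outside ThingWorx. In a Subscription on the DataChange event of `TTF_Predicted`, the single assignment with `me` in place of `thing` would be enough.

```javascript
// Copy the value written by Analytics into the Logged property.
// `eventData` has the shape of a DataChange event payload.
function syncPredictedTtf(thing, eventData) {
    // Writing through the platform property setter lets the
    // Value Stream record the change of the Logged property.
    thing.TTF_Final = eventData.newValue.value;
}

// Equivalent one-line Subscription body inside ThingWorx:
// me.TTF_Final = eventData.newValue.value;
```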

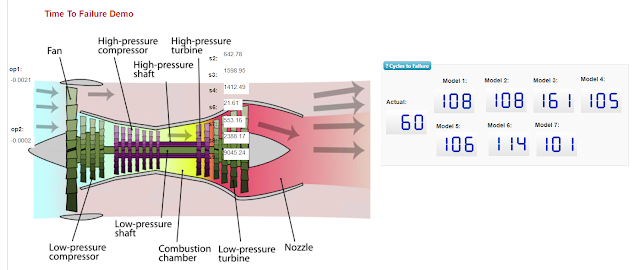

The charts below compare the TTFs predicted by the Time Series Data models with the actual TTFs.

We can see that, compared to Non Time Series models, the prediction is much more accurate and matches the actual TTF curve faster.

The table below lists the settings of all models:

One more note: to make sure all features are written into the Value Stream at the same moment, in the same record, we should use UpdatePropertyValues instead of SetPropertyValues.

Some code for reference:

----------------------------------------------------------------------

// Use UpdatePropertyValues to make sure all attributes are updated at the same moment
var params = {
    infoTableName : "InfoTable",
    dataShapeName : "NamedVTQ"
};

// CreateInfoTableFromDataShape(infoTableName:STRING("InfoTable"), dataShapeName:STRING):INFOTABLE(VSTestDataShape)
var tempTable = Resources["InfoTableFunctions"].CreateInfoTableFromDataShape(params);

// One shared timestamp so that all features are logged in the same record
var time = new Date();

tempTable.AddRow({
    time: time,
    name: "F1",
    quality: "GOOD",
    value: 71
});

tempTable.AddRow({
    time: time,
    name: "F2",
    quality: "GOOD",
    value: 72
});

tempTable.AddRow({
    time: time,
    name: "F3",
    quality: "GOOD",
    value: 73
});

tempTable.AddRow({
    time: time,
    name: "F4",
    quality: "GOOD",
    value: 74
});

tempTable.AddRow({
    time: time,
    name: "F5",
    quality: "GOOD",
    value: 75
});

// Write all five features in a single call
me.UpdatePropertyValues({
    values: tempTable /* INFOTABLE */
});

var result = tempTable;

----------------------------------------------------------------------